AI Cheating Tool Startup Raises $5.3M After Columbia Student Suspension


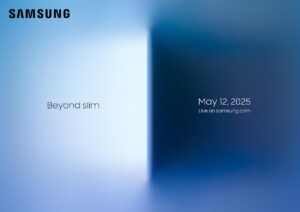

Cluely’s AI cheating tool offers an undetectable in-browser window that assists users during interviews, exams, and other scenarios. (Image: Cluely)

A controversial AI cheating tool has raised $5.3 million in seed funding even though its founder was suspended from Columbia University for creating it. On Sunday, 21-year-old Chungin “Roy” Lee announced that his startup, Cluely, had received the investment from Abstract Ventures and Susa Ventures to further develop a tool designed to help users “cheat on everything,” from job interviews to exams and sales calls.


The Origin Story: From University Suspension to Funded Startup

The journey of this AI cheating tool began when Lee and his co-founder Neel Shanmugam, both 21-year-old Columbia University students, developed a tool initially called “Interview Coder” to help software engineers cheat during job interviews. This controversial creation led to disciplinary action by Columbia University, with both students ultimately dropping out amid the proceedings, according to Columbia’s student newspaper.

- Lee and Shanmugam develop the initial version of the AI cheating tool, focused on helping developers bypass LeetCode-style interview questions.

- Lee claims to have secured an Amazon internship using the tool (Amazon has stated that candidates must acknowledge they won’t use unauthorized tools during interviews).

- Columbia University initiates disciplinary proceedings against both students for creating the AI cheating tool.

- Columbia’s student newspaper reports that both students have dropped out amid the controversy.

- Lee announces $5.3 million in seed funding for Cluely, the formal startup built around the AI cheating tool.

In a viral X thread, Lee shared the story of his suspension for developing the technology. Rather than deterring the young entrepreneurs, the academic setback seems to have accelerated their business ambitions: what began as a tool for bypassing technical interviews has become a San Francisco-based startup with significant venture backing.

How the AI Cheating Tool Works

The AI cheating tool, now marketed under the Cluely brand, runs in a hidden in-browser window that, according to the company, stays invisible to whoever is conducting the interview or administering the test. Users receive real-time AI answers, suggestions, and information during high-stakes situations, in effect getting a concealed AI coach.

Key Features of the AI Cheating Tool

- Invisible Interface: Operates in a hidden browser window that can’t be detected by interviewers or test administrators

- Real-time Assistance: Provides on-the-fly help during interviews, exams, and other scenarios

- Versatile Applications: Originally designed for coding interviews but now marketed for various scenarios including sales calls and even dates

- Zero Footprint: Leaves no visible trace of assistance, making it virtually undetectable through conventional monitoring methods

Cluely has published a manifesto on its website comparing its AI cheating tool to calculators and spellcheck, technologies that were initially criticized as forms of “cheating” before becoming widely accepted. The comparison frames the controversial product as simply the next step in technological assistance rather than an ethical transgression.

Controversy and Public Reaction

The AI cheating tool has sparked intense debate online, particularly after Cluely released a promotional video showing Lee using the hidden AI assistant on a date at an upscale restaurant. In the video, Lee tries to impress his date by secretly relying on the AI for talking points about art and for help lying about his age, a scenario that many viewers found disturbing.

Public reaction to the AI cheating tool has been sharply divided. While some praise the founders’ brazen marketing approach and technical innovation, others express serious concerns about the ethical implications of technology designed specifically to enable dishonesty. Critics have compared the concept to episodes of dystopian science fiction, questioning whether such tools represent progress or a concerning erosion of trust in human interactions.

Supporters Argue

- The AI cheating tool merely levels the playing field in an already flawed evaluation system

- Technical interviews based on LeetCode are poor indicators of actual job performance

- Similar assistance tools like calculators were once considered “cheating”

- The technology represents innovation and entrepreneurial spirit

Critics Argue

- The AI cheating tool explicitly promotes dishonesty and misrepresentation

- It could undermine trust in educational assessments and hiring processes

- Unlike calculators, it’s designed to deceive rather than to be used transparently

- The promotional material celebrates lying in personal relationships

Business Success Despite Ethical Questions

Despite, or perhaps because of, the controversy surrounding it, the AI cheating tool appears to be a commercial success. In his announcement, Lee claimed that Cluely had crossed $3 million in annual recurring revenue (ARR) earlier this month, which, if accurate, suggests strong market demand for the product.

“I got kicked out of Columbia for building Interview Coder. Today, I’m announcing our $5.3M seed round for @ClueLyhq, from @abstractvc and @susaventures. We help people cheat on everything. Earlier this month, we crossed $3M in ARR.”

— Chungin “Roy” Lee, CEO of Cluely

The substantial seed funding from respected venture capital firms indicates that despite ethical concerns, investors see significant potential in the AI cheating tool. This financial vote of confidence raises questions about the values being prioritized in the tech investment landscape, where growth and innovation potential may sometimes outweigh considerations about a product’s societal impact.

Ethical Implications and Future Concerns

The emergence and apparent success of this AI cheating tool raises profound questions about the future of assessment, trust, and authenticity in various contexts. If tools like Cluely become widespread, there could be far-reaching implications for education, hiring practices, and even personal relationships.

Potential Broader Implications

The normalization of AI cheating tools could trigger an arms race between assistance technologies and detection methods. Educational institutions and employers may need to fundamentally rethink evaluation approaches, potentially moving away from knowledge-based testing toward assessments that AI cannot easily assist with. This might include more in-person, practical demonstrations of skills or collaborative project work where continuous observation makes AI assistance less feasible.

Cluely isn’t alone in pushing ethical boundaries in AI development. Earlier this month, another controversial AI startup launched with the stated mission of “replacing all human workers everywhere,” part of a broader trend of provocative AI ventures that challenge societal norms.

As AI cheating tools and other controversial AI applications continue to emerge, society faces important questions about the values we wish to embed in our technological future. The success or failure of ventures like Cluely may serve as an important indicator of where those boundaries ultimately lie.

Published on April 21, 2025